The End of the Playlist: Why the Future of Content is Generative, Not Curated

We used to search for content. Soon, content will find us. Or rather, it will be created for us. From SunoAI to Endel, we are moving from a world of "Discovery" to a world of "Contextual Generation."

We are entering the era of "Zero-Prompt" media. The feed won't just guess what video you want to watch; it will generate it based on your heart rate.

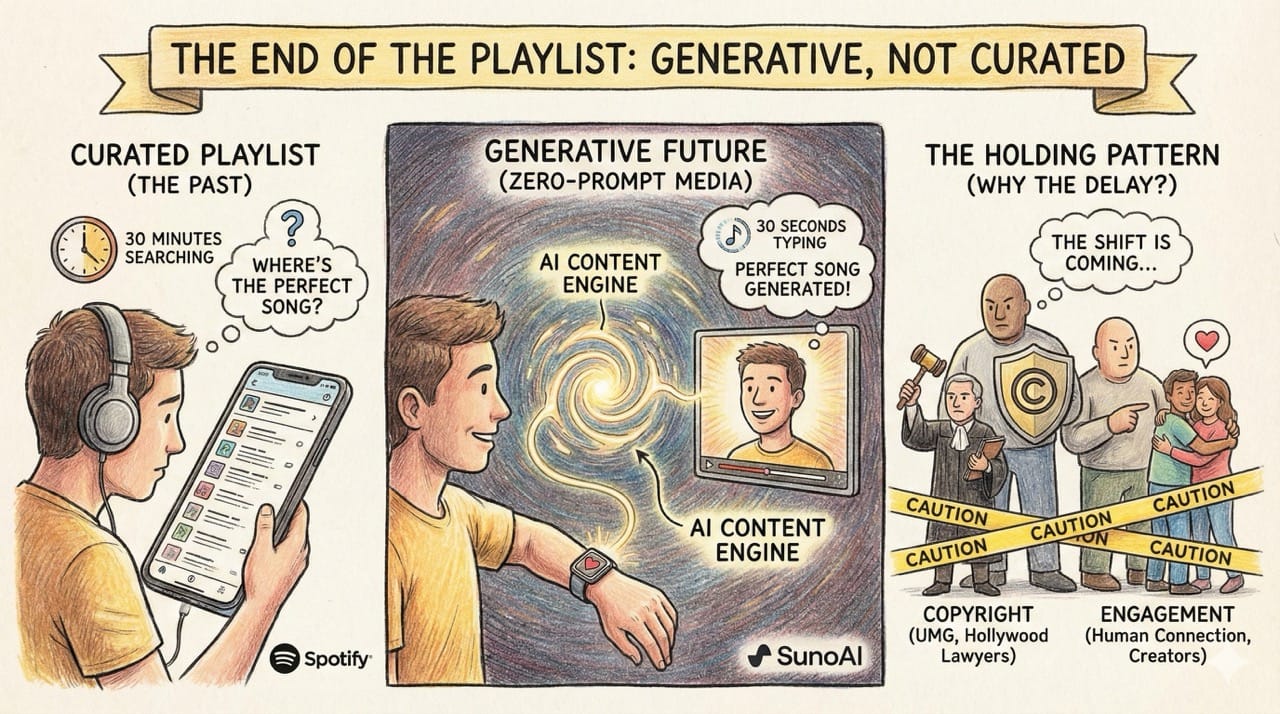

Inspiration: Trying SunoAI for the first time and realizing that finding the perfect song took 30 seconds of typing, not 30 minutes of searching Spotify.

Generative AI has exploded over the last two years. But the hyperscalers (Meta, Google) have been cautious.

They have built text and video generation tools, but they haven't flooded their feeds with AI content yet.

Why? Two reasons:

- Copyright: They are avoiding the napalm of Universal Music Group and Hollywood lawyers.

- Engagement: Currently, human connection (creators) still drives the best retention. They don't want to break the "Social" in Social Media.

But this is a holding pattern. The shift is coming.

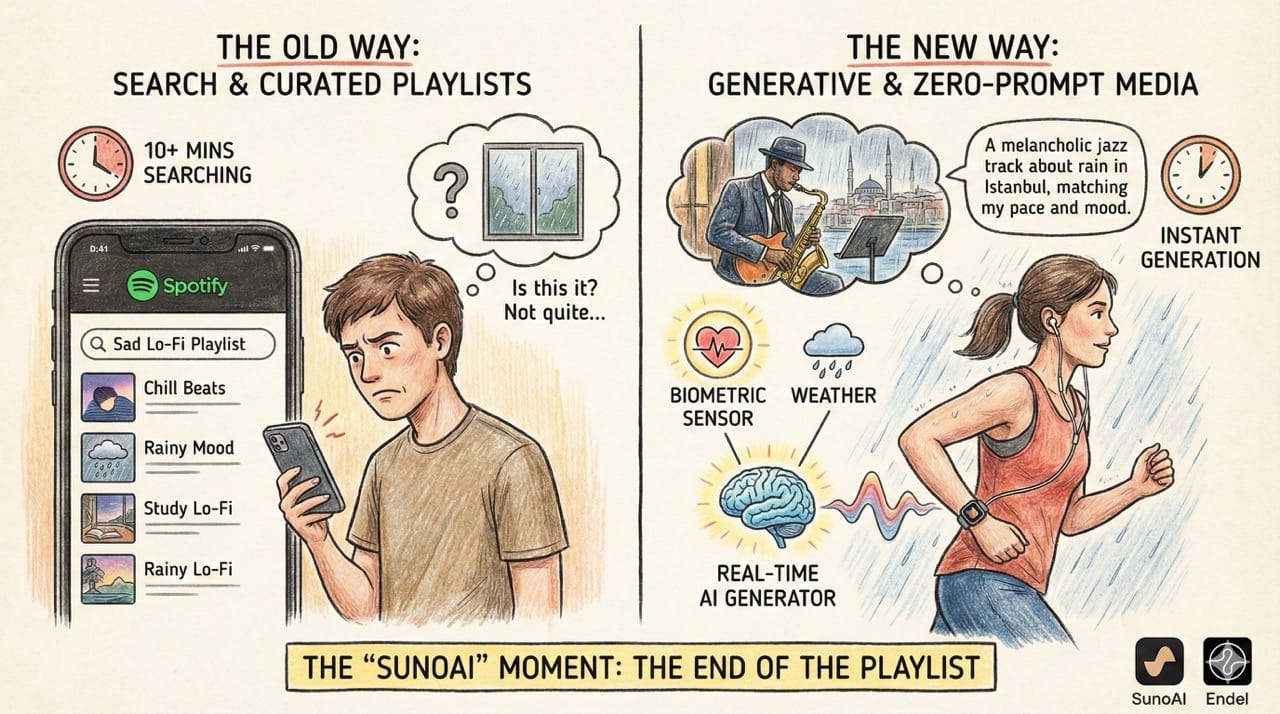

The "SunoAI" Moment

If you haven't tried SunoAI, do it. It’s frictionless. You type "A melancholic jazz track about rain in Istanbul," and 10 seconds later, you have a radio-quality song.

This kills the "Search" paradigm.

Why would I search Spotify for a "Sad Lo-Fi Playlist" curated by a stranger, when I can generate a track that matches my exact mood, location, and activity?

The Future of Music Curation: It won't be a playlist. It will be a stream. You won't even need to type a prompt. Your phone already knows you are running (accelerometer), it knows it's raining (weather app), and it knows your heart rate is 150 bpm (Apple Watch). The output will be a seamless, never-ending track generated in real time to match your running cadence perfectly.

Endel has already shown this works. Its algorithmic soundscapes are popular because they are functional: they exist to help you focus, relax, or sleep. AI music will do the same for entertainment.
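To make the "zero-prompt" stream less abstract, here is a minimal sketch of how passive context signals might be turned into a request for a music generator. Everything here is an assumption for illustration. `Context`, `build_generation_request`, and the thresholds are hypothetical names and values, not the API of SunoAI, Endel, or Apple Watch. The idea is simply that tempo follows the runner's step rate, energy follows heart rate, and mood follows the weather.

```python
from dataclasses import dataclass


@dataclass
class Context:
    # Hypothetical signals a phone/watch might expose; field names are illustrative.
    activity: str          # e.g. "running", inferred from the accelerometer
    steps_per_minute: int  # running cadence, from the accelerometer
    heart_rate_bpm: int    # from a smartwatch
    weather: str           # e.g. "rain", from a weather app


def build_generation_request(ctx: Context) -> dict:
    """Turn passive context signals into parameters for a hypothetical music generator."""
    # Match the track's tempo to the runner's step rate; otherwise use a resting tempo (assumed 80 BPM).
    tempo = ctx.steps_per_minute if ctx.activity == "running" else 80

    # Map heart rate to an energy level; these thresholds are arbitrary assumptions.
    if ctx.heart_rate_bpm >= 150:
        energy = "high"
    elif ctx.heart_rate_bpm >= 110:
        energy = "medium"
    else:
        energy = "low"

    # Let the weather color the mood of the track.
    mood = "melancholic" if ctx.weather == "rain" else "upbeat"

    prompt = f"{mood} {energy}-energy track for {ctx.activity} at {tempo} BPM"
    return {"prompt": prompt, "tempo_bpm": tempo, "energy": energy}


if __name__ == "__main__":
    # A rainy run at 170 steps per minute with a heart rate of 150 bpm.
    print(build_generation_request(Context("running", 170, 150, "rain")))
```

In a real system, the request would be regenerated continuously as the signals change, which is what would make the output a stream rather than a playlist.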

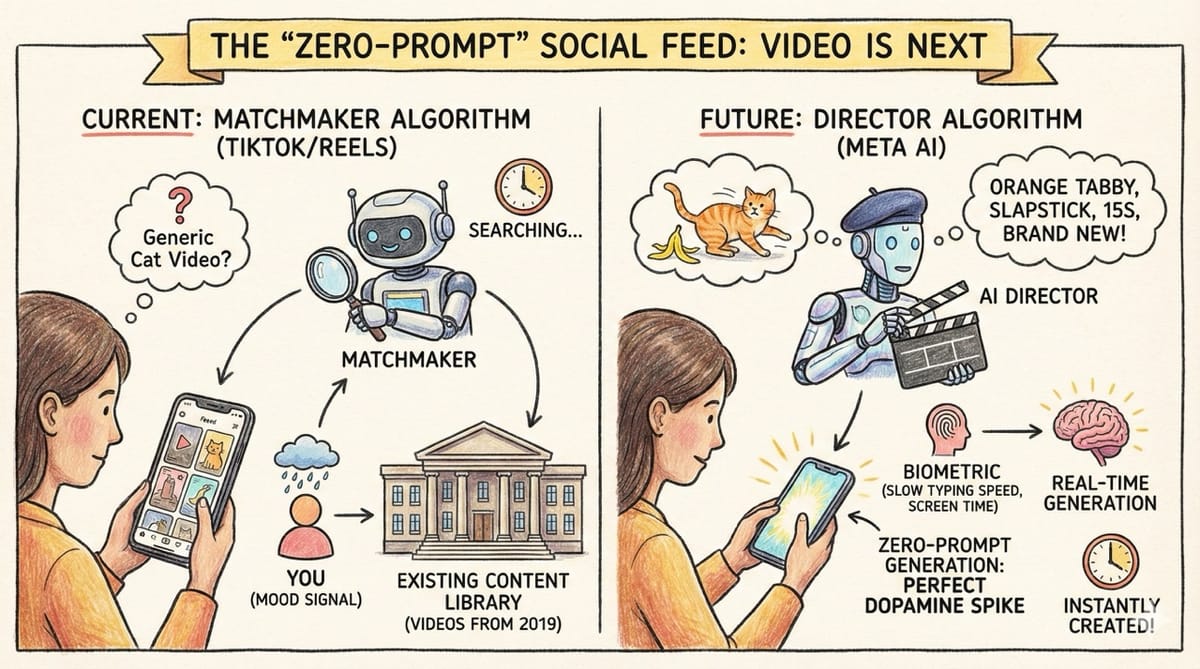

The "Zero-Prompt" Social Feed

Video is next. Right now, the TikTok/Reels algorithm is a Matchmaker. It matches you with existing videos. In the future, the algorithm becomes a Director.

Imagine opening Instagram when you are feeling down (the algorithm could already infer this from your typing speed and screen time). Instead of finding a funny cat video from 2019, Meta AI generates a new one.

It knows you specifically like orange tabbies and slapstick humor. It generates a 15-second clip that has never existed before, perfectly tailored to spike your dopamine in that exact moment. This is "Zero-Prompt Generation." The user provides the signal (mood); the AI provides the content.
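The shift from Matchmaker to Director can be sketched roughly as follows. The user supplies only signals, and the system composes a brief for a brand-new clip instead of ranking existing videos. This is a toy illustration under loud assumptions. `UserProfile`, `infer_mood`, `direct_clip`, the typing-speed heuristic, and its thresholds are all hypothetical. They do not describe how Meta, TikTok, or Instagram actually work.

```python
from dataclasses import dataclass, field


@dataclass
class UserProfile:
    # Hypothetical preference profile learned from past engagement.
    liked_subjects: list[str] = field(default_factory=list)
    liked_styles: list[str] = field(default_factory=list)


def infer_mood(typing_speed_wpm: float, baseline_wpm: float, screen_minutes_today: float) -> str:
    # Toy heuristic (assumption): typing well below your usual speed plus heavy screen time reads as "down".
    if typing_speed_wpm < 0.7 * baseline_wpm and screen_minutes_today > 180:
        return "down"
    return "neutral"


def direct_clip(profile: UserProfile, mood: str, seconds: int = 15) -> dict:
    """'Director' mode: compose a brief for a new clip rather than retrieving an existing one."""
    goal = "cheer up" if mood == "down" else "entertain"
    # Fall back to generic defaults when the profile is empty (assumed defaults).
    subject = profile.liked_subjects[0] if profile.liked_subjects else "animals"
    style = profile.liked_styles[0] if profile.liked_styles else "light comedy"
    return {"duration_s": seconds, "subject": subject, "style": style, "goal": goal}


if __name__ == "__main__":
    profile = UserProfile(["orange tabby cat"], ["slapstick"])
    mood = infer_mood(typing_speed_wpm=25, baseline_wpm=45, screen_minutes_today=240)
    print(mood, direct_clip(profile, mood))
```

The brief would then be handed to a video model. The key design point is that nothing in the loop requires the user to type a prompt.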

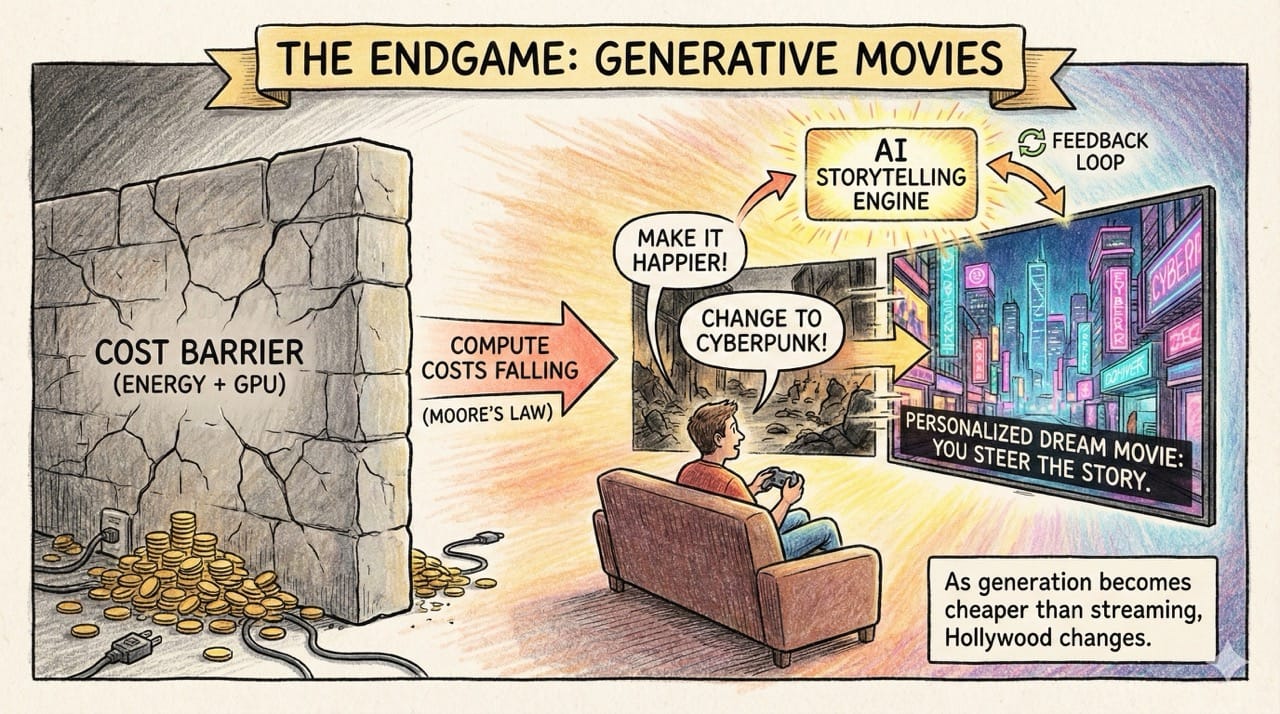

The Endgame: Generative Movies

Why aren't we there yet? Cost. Generating video is expensive in both energy and GPU time.

But compute costs keep falling (think Moore's Law). Once the cost of generating a pixel drops below the cost of streaming a pixel, Hollywood changes.

We will see Generative Movies. You won't just watch a movie; you will steer it. You might ask the system to "make the ending happier" or "change the setting to Cyberpunk." As AI storytelling improves with feedback loops, the movie becomes a personalized dream.

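Netflix experimented with something similar in Black Mirror: Bandersnatch (2018), an interactive film in which viewers choose where the plot goes. Even more relevant examples are video games like Detroit: Become Human and GTA V, whose stories branch based on the player's choices.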

Speaking of Netflix, it will need to capitalize on this trend faster than the social media platforms do, or it will lose its share of the market's finite attention.

The intuition might be that viewers enjoy a narrative they can't anticipate more. Yet when you give them more autonomy and a wide variety of endings, retention and viewership rates go up.

The real magic? People also share these different endings on social channels for free and keep returning to the 'product' to enjoy it again and again.

Now imagine reaching this point in a timeline where headsets like Meta Quest are also widely adopted. The immersion would be unbelievable.

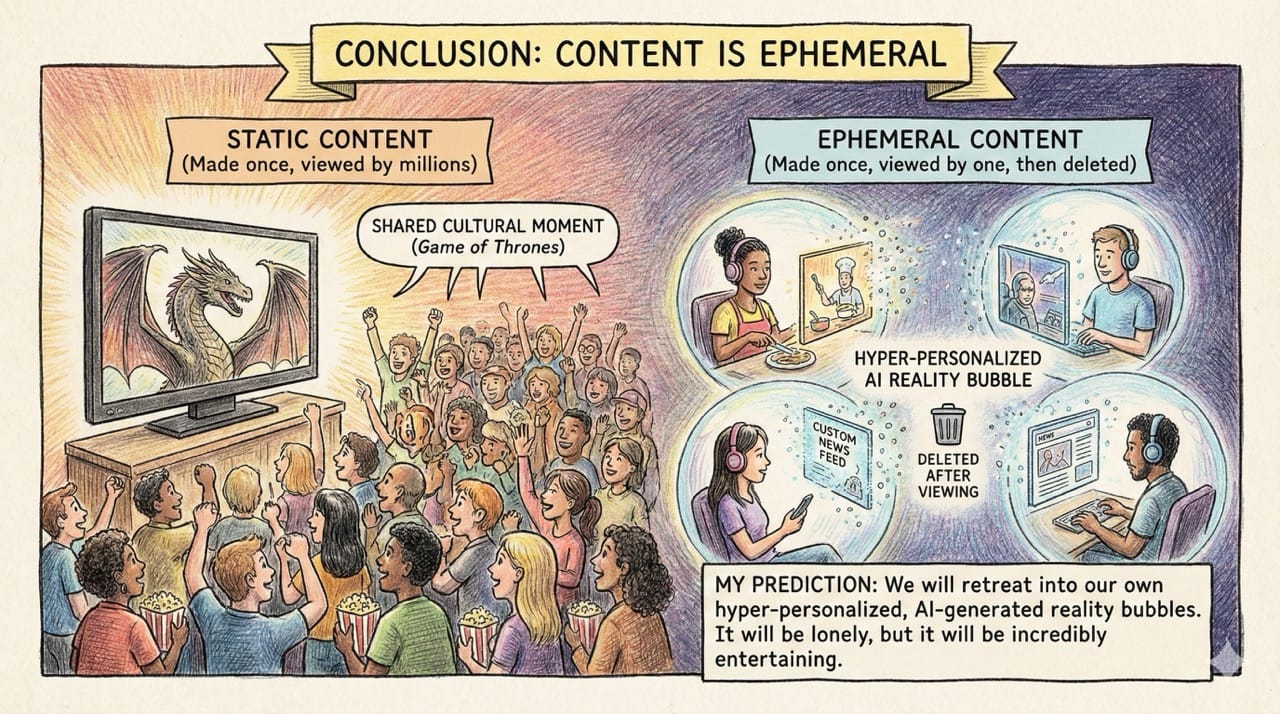

Conclusion: Content is Ephemeral

We are moving from Static Content (made once, viewed by millions) to Ephemeral Content (made once, viewed by one, then deleted).