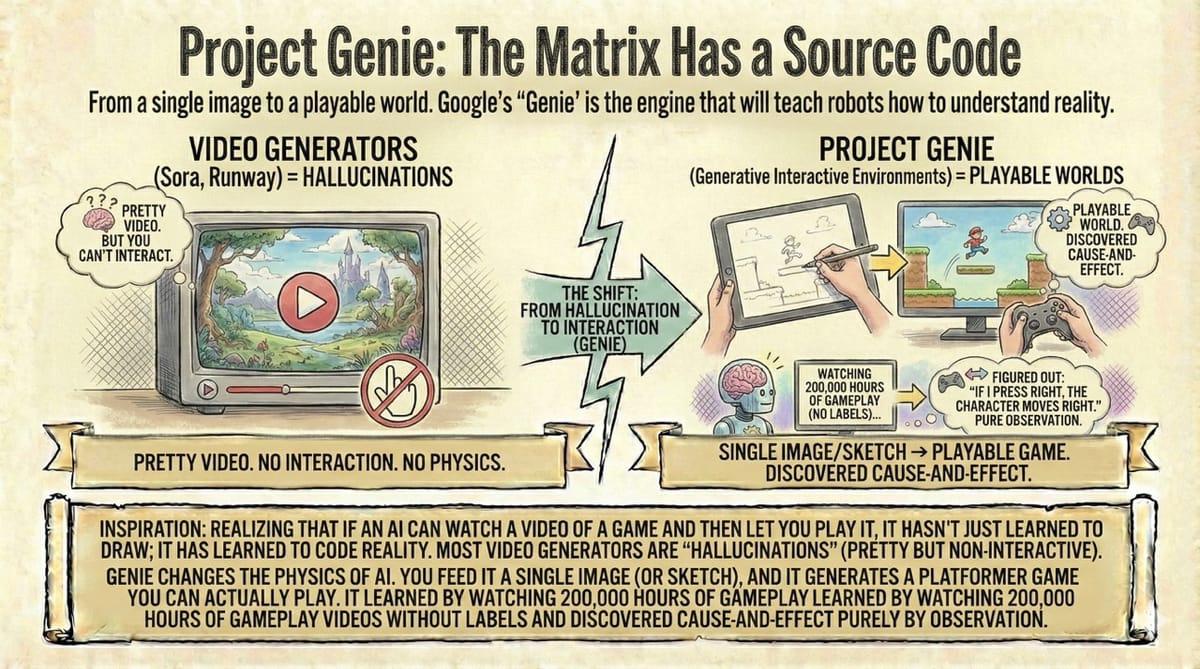

Project Genie: The Matrix Has a Source Code

We thought AI was learning to draw. We were wrong. It is learning to simulate. Genie isn't a game engine; it is the bootloader for AGI.

From a single image to a playable world. Google’s "Genie" isn't just a video generator; it is the engine that will teach robots how to understand reality.

Inspiration: Realizing that if an AI can watch a video of a game and then let you play it, it hasn't just learned to draw; it has learned to code reality.

Most video generators (Sora, Runway) produce "hallucinations": a pretty video that you can watch but can't interact with. It's a movie.

Genie 3 (the name is short for Generative Interactive Environments) changes the rules. It creates playable worlds.

The workflow is the ultimate creative stack:

- Dream it: You use Nano Banana Pro to generate a "Canvas" (a static image of a world, a character, or a concept).

- Play it: Genie 3 ingests that canvas and turns it into a world that responds to your controls, as if a physics engine had been built around it.

The original Genie research model learned by watching 200,000 hours of gameplay videos without labels. It figured out "If I press right, the character moves right" purely by observation. It discovered cause-and-effect.

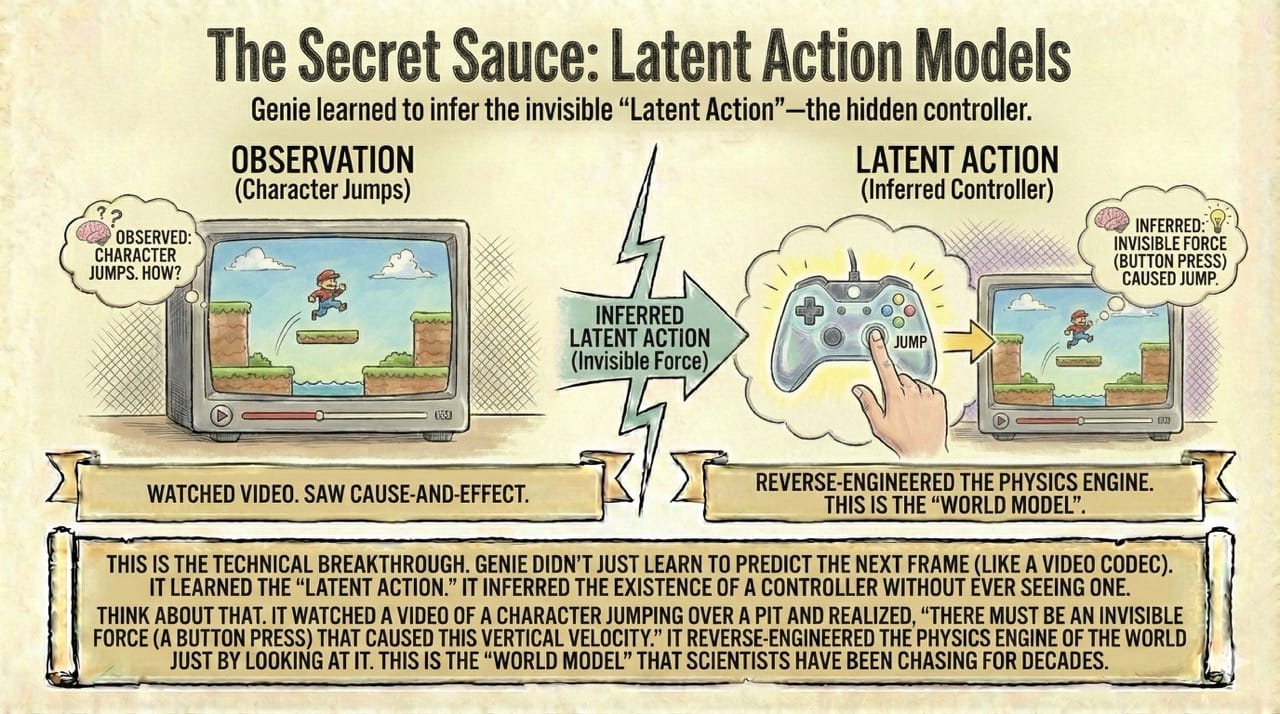

The Secret Sauce: Latent Action Models

This is the technical breakthrough. Genie didn't just learn to predict the next frame (like a video codec). It learned "latent actions": a small set of discrete codes that explain how one frame turns into the next.

It inferred the existence of a controller without ever seeing one.

Think about that. It watched a video of a character jumping over a pit and realized, "There must be an invisible force (a button press) that caused this vertical velocity."

It reverse-engineered the physics engine of the world just by looking at it. This is the "World Model" that scientists have been chasing for decades.
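To make the idea concrete, here is a toy sketch of inferring latent actions from unlabeled "video." It is not Genie's architecture: the real model works on pixels with learned neural networks, while this sketch assumes a "frame" is just a character's (x, y) position and swaps in plain k-means clustering for the learned model. The names `record_video`, `infer_latent_actions`, and `step` are hypothetical, invented for illustration.

```python
import math
import random

# Hypothetical toy world: a "frame" is only the character's (x, y) position.
HIDDEN_MOVES = {"right": (1.0, 0.0), "left": (-1.0, 0.0), "jump": (0.0, 1.0)}

def record_video(hidden_actions, seed=0):
    """Produce frames only; the action names never leave this function."""
    rng = random.Random(seed)
    x, y = 0.0, 0.0
    frames = [(x, y)]
    for name in hidden_actions:
        dx, dy = HIDDEN_MOVES[name]
        x += dx + rng.uniform(-0.1, 0.1)  # small jitter stands in for visual noise
        y += dy + rng.uniform(-0.1, 0.1)
        frames.append((x, y))
    return frames

def infer_latent_actions(frames, num_codes=3, iters=10):
    """Cluster frame-to-frame changes into a small discrete codebook (k-means)."""
    deltas = [(bx - ax, by - ay) for (ax, ay), (bx, by) in zip(frames, frames[1:])]
    # Farthest-point initialisation so the starting codes are spread apart.
    codebook = [deltas[0]]
    while len(codebook) < num_codes:
        codebook.append(max(deltas, key=lambda d: min(math.dist(d, c) for c in codebook)))
    for _ in range(iters):
        # Assign every transition to its nearest code, then move each code to its mean.
        labels = [min(range(num_codes), key=lambda k: math.dist(d, codebook[k])) for d in deltas]
        for k in range(num_codes):
            members = [d for d, lab in zip(deltas, labels) if lab == k]
            if members:
                codebook[k] = (sum(m[0] for m in members) / len(members),
                               sum(m[1] for m in members) / len(members))
    return labels, codebook

def step(frame, code, codebook):
    """Action-conditioned prediction: next frame = current frame + learned effect of the code."""
    dx, dy = codebook[code]
    return (frame[0] + dx, frame[1] + dy)

# The "video": the model sees the frames but never the button presses.
hidden = ["right", "right", "jump", "left", "right", "jump", "left", "left"]
frames = record_video(hidden)
labels, codebook = infer_latent_actions(frames)

# Replay the inferred codes from the first frame to "play" the learned world.
replayed = frames[0]
for code in labels:
    replayed = step(replayed, code, codebook)

print("inferred codes:", labels)
print("replayed end position:", replayed, "recorded end position:", frames[-1])
```

The inferred codes are arbitrary integers, not button names. What matters is that every hidden button ends up mapped to its own code, so a player can pick a code and the world responds consistently.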

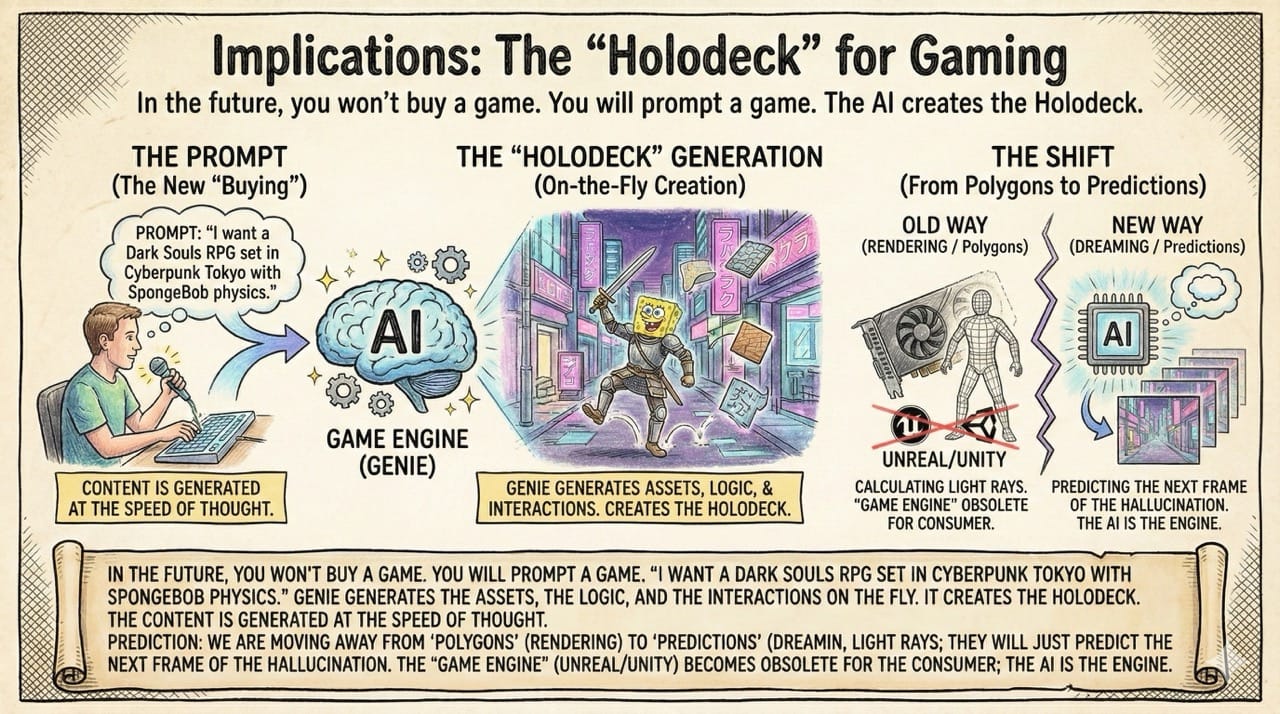

Implications: The "Holodeck" for Gaming

In the future, you won't buy a game. You will prompt a game.

"I want a Dark Souls RPG set in Cyberpunk Tokyo with SpongeBob physics."

Genie generates the assets, the logic, and the interactions on the fly. It creates the Holodeck. The content is generated at the speed of thought.

Prediction: We are moving away from "Polygons" (rendering) to "Predictions" (dreaming). Future graphics cards won't calculate light rays; they will just predict the next frame of the hallucination. The "Game Engine" (Unreal/Unity) becomes obsolete for the consumer; the AI is the engine.

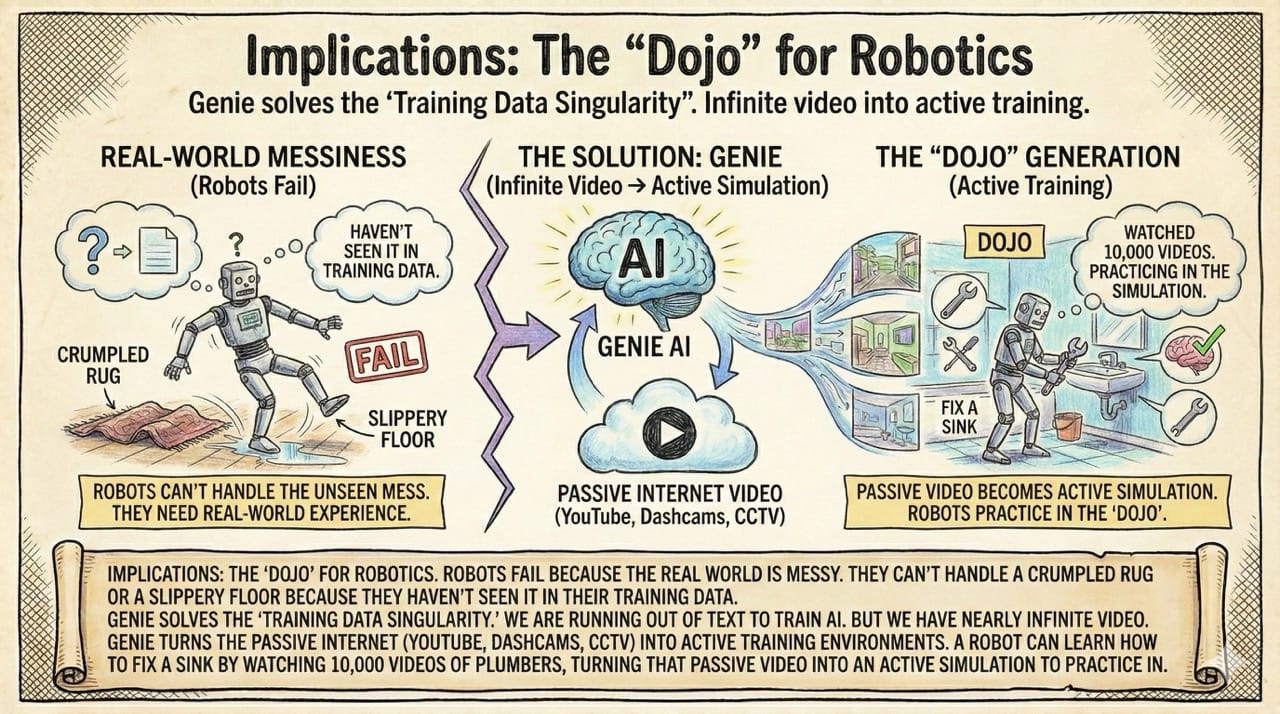

Implications: The "Dojo" for Robotics

Robots fail because the real world is messy. They can't handle a crumpled rug or a slippery floor because they haven't seen it in their training data.

Genie solves the "Training Data Singularity."

We are running out of text to train AI. But we have nearly infinite video. Genie turns the passive internet (YouTube, dashcams, CCTV) into active training environments.

A robot can learn how to fix a sink by watching 10,000 videos of plumbers, turning that passive video into an active simulation to practice in.

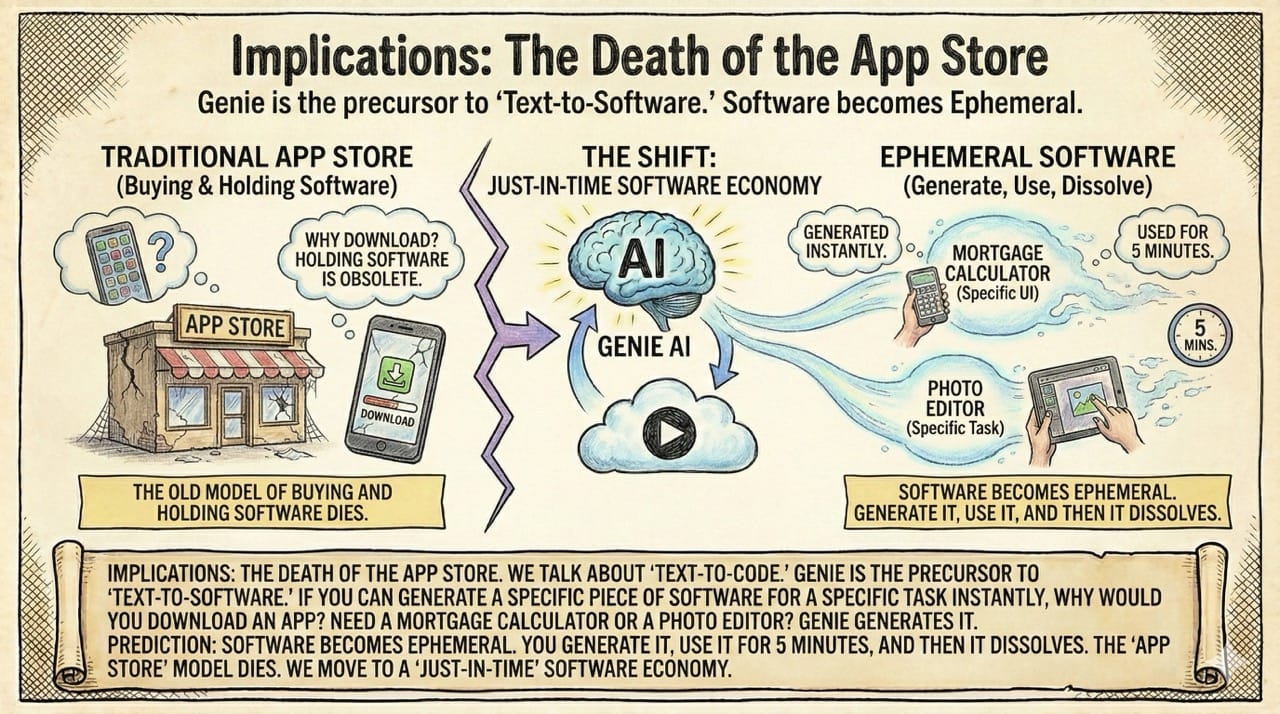

Implications: The Death of the App Store

We talk about "Text-to-Code." Genie is the precursor to "Text-to-Software."

If you can generate a specific piece of software for a specific task instantly, why would you download an app?

- Need a mortgage calculator with a specific UI? Genie generates it.

- Need a photo editor for one specific task? Genie generates it.

Prediction: Software becomes Ephemeral. You generate it, use it for 5 minutes, and then it dissolves. The "App Store" model of buying and holding software dies. We move to a "Just-in-Time" software economy.

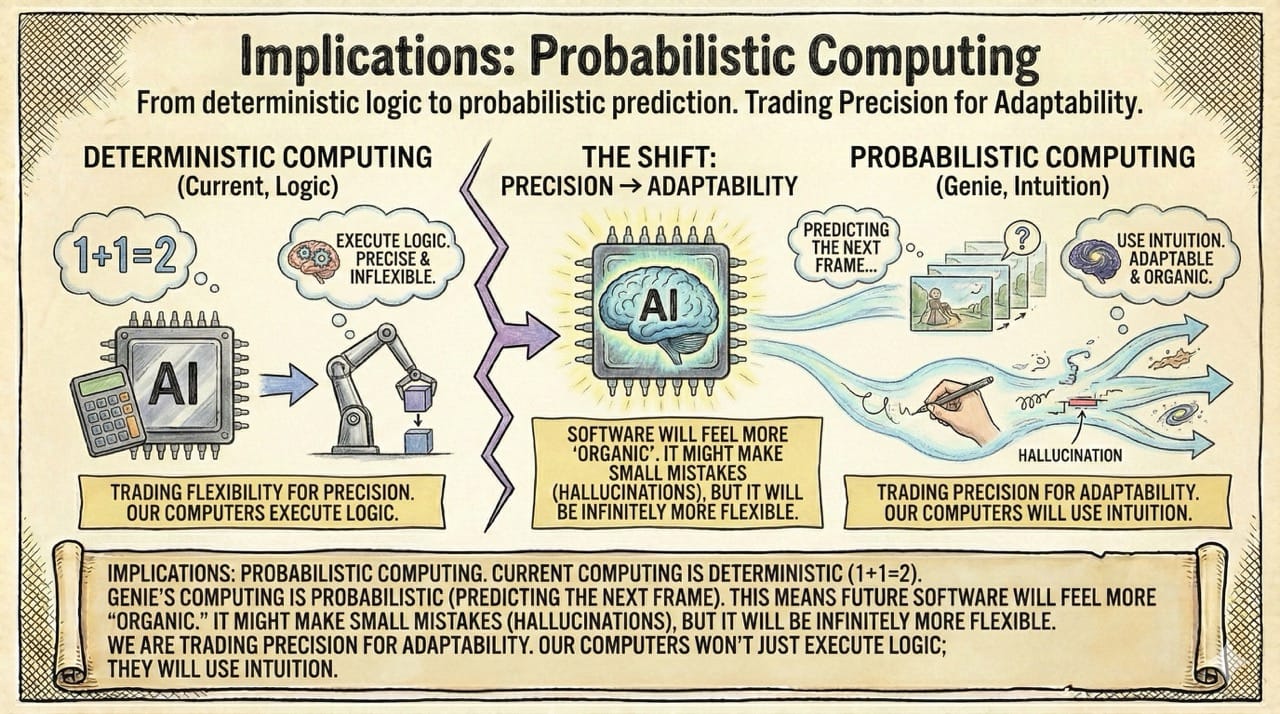

Implications: Probabilistic Computing

Current computing is deterministic (1+1=2). Genie's computing is probabilistic (predicting the next frame).

This means future software will feel more "organic." It might make small mistakes (hallucinations), but it will be infinitely more flexible.

We are trading Precision for Adaptability. Our computers won't just execute logic; they will use intuition.
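A minimal sketch of that trade, under made-up numbers: the deterministic function always returns the same answer, while the probabilistic one samples the next state from a predicted distribution and occasionally picks an unlikely outcome. The probabilities in `predicted_distribution` are invented for illustration and do not come from any real model.

```python
import random
from collections import Counter

def deterministic_step(x):
    """Classic computing: same input, same output, every time."""
    return x + 1

def predicted_distribution(x):
    """Hypothetical model output: probabilities over where the character is next."""
    return {x + 1: 0.90, x: 0.07, x + 2: 0.03}

def probabilistic_step(x, rng):
    """Predictive computing: sample the next state instead of calculating it."""
    outcomes, weights = zip(*predicted_distribution(x).items())
    return rng.choices(outcomes, weights=weights, k=1)[0]

rng = random.Random(42)
samples = Counter(probabilistic_step(0, rng) for _ in range(10_000))

print("deterministic:", deterministic_step(1))
print("probabilistic outcomes for x=0:", dict(samples))
```

Most samples land on the expected outcome, but a small share do not. Those off-target samples are the "small mistakes" of a system that predicts instead of calculates.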

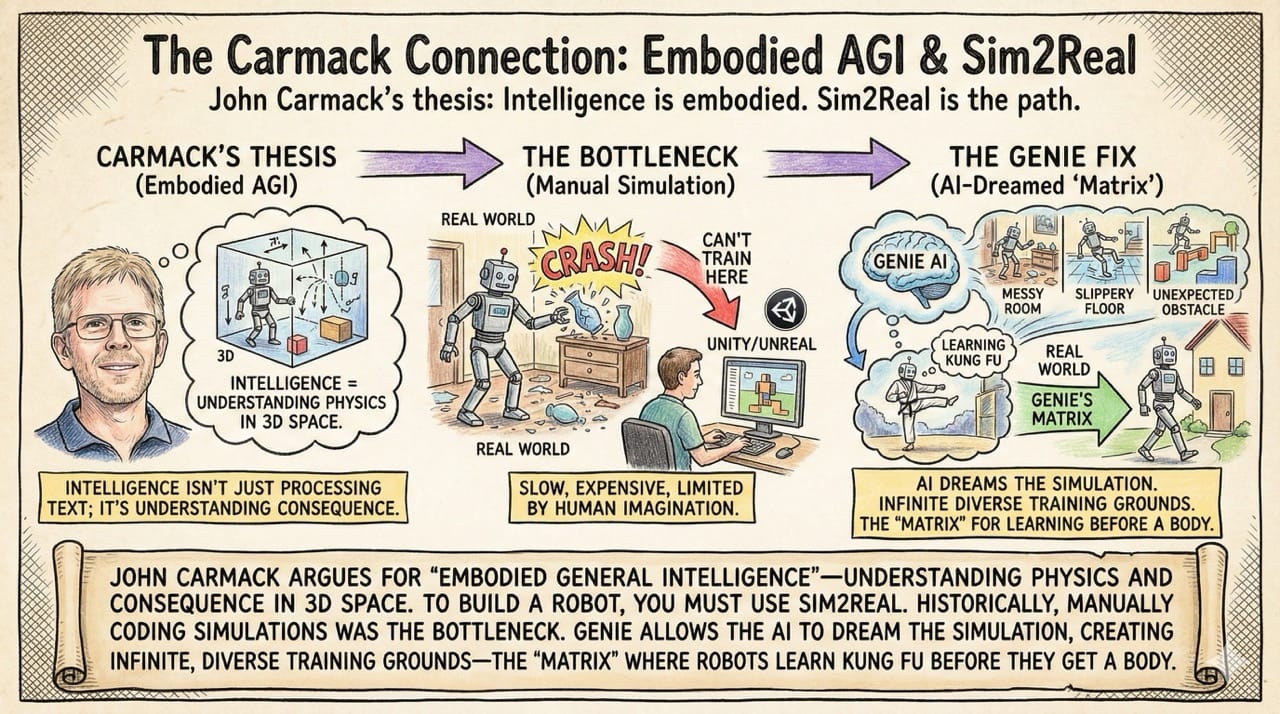

The Carmack Connection: Embodied AGI & Sim2Real

This aligns perfectly with what John Carmack (co-creator of Doom, now founder of Keen Technologies) has been arguing for years.

His thesis for AGI is "Embodied General Intelligence." Intelligence isn't just processing text; it's understanding physics, gravity, and consequence in a 3D space.

To build a robot that can walk in your house, you have to train it. But you can't train it in the real world (it breaks things). You have to use Sim2Real: training in a simulation, then transferring to reality.

The Bottleneck: Historically, humans had to manually code these simulations (using Unity or Unreal). It was slow, expensive, and limited by human imagination.

The Genie Fix: Genie allows the AI to dream the simulation. It generates infinite, diverse training grounds (messy rooms, slippery floors, unexpected obstacles) instantly. It provides the "Matrix" where the robots learn kung fu before they get a body.
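For contrast, here is roughly what the hand-coded version of "diverse training grounds" looks like: classic domain randomization, where a human picks the parameters and ranges in advance. This is a sketch of the older technique, not of Genie. `RoomConfig`, its fields, and the ranges are all hypothetical. The pitch for a world model is that variety would come from learned video rather than from a list like this.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class RoomConfig:
    """Hypothetical simulation parameters for one training room."""
    floor_friction: float   # low values mean a slippery floor
    num_obstacles: int      # unexpected objects on the floor
    rug_crumpled: bool      # whether the rug lies flat or bunched up

def sample_room(rng):
    """Draw one randomized room from hand-picked ranges (assumed values)."""
    return RoomConfig(
        floor_friction=rng.uniform(0.1, 1.0),
        num_obstacles=rng.randint(0, 8),
        rug_crumpled=rng.random() < 0.3,
    )

def training_rooms(n, seed=0):
    """Generate n rooms reproducibly so training runs can be compared."""
    rng = random.Random(seed)
    return [sample_room(rng) for _ in range(n)]

rooms = training_rooms(1000)
slippery = sum(room.floor_friction < 0.3 for room in rooms)
print(f"{len(rooms)} rooms generated, {slippery} with slippery floors")
```

Every axis of variation here had to be imagined and typed in by a person, which is exactly the bottleneck described above.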

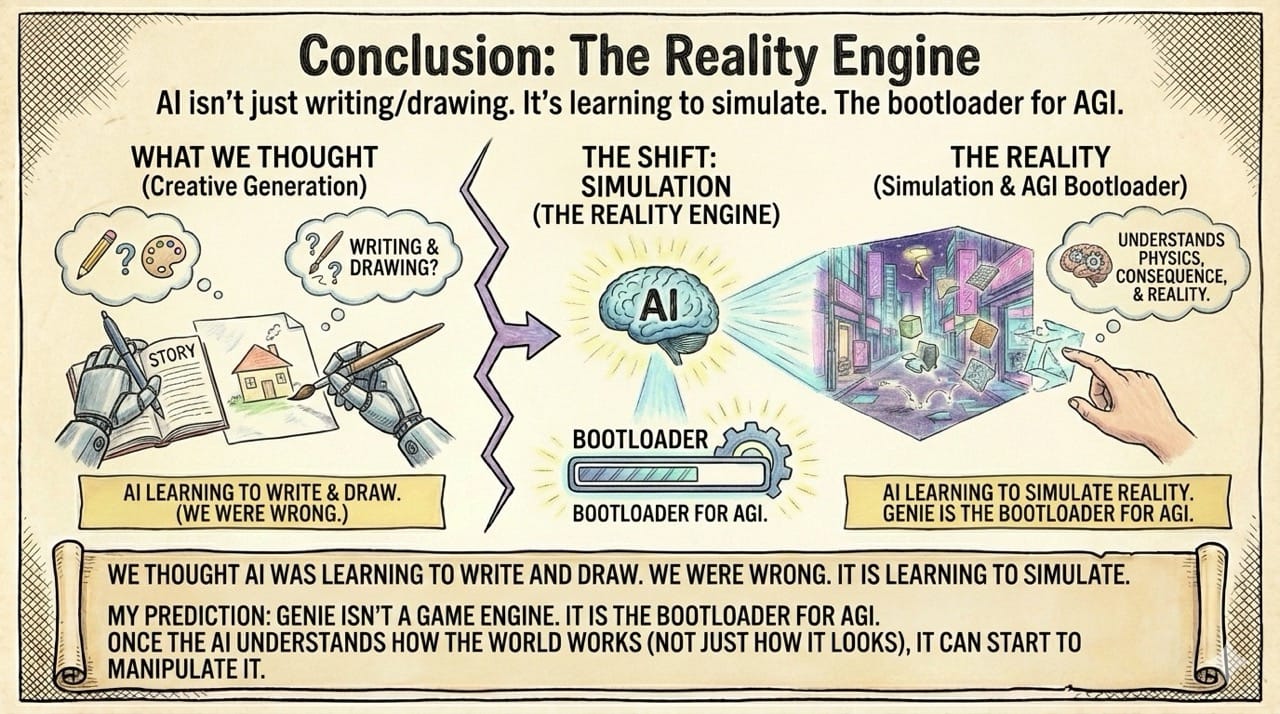

Conclusion: The Reality Engine

We thought AI was learning to write and draw. We were wrong. It is learning to simulate.

My Prediction: Genie isn't a game engine. It is the bootloader for AGI. Once the AI understands how the world works (not just how it looks), it can start to manipulate it.