Anthropic’s Super Bowl Flex: Why the "Safety" Lab is Suddenly Picking a Fight

Anthropic just spent millions on a Super Bowl ad to troll OpenAI. It feels like a win, but it signals something else: the "Safety" lab is running out of runway and needs to become a consumer brand, fast.

We thought Anthropic was the quiet, ethical alternative. Their Super Bowl ad proves they are just another startup burning cash to steal market share. And ads are coming next.

Inspiration: Seeing Anthropic’s aggressive Super Bowl ad targeting OpenAI, and Sam Altman’s defensive tweet that backfired instantly.

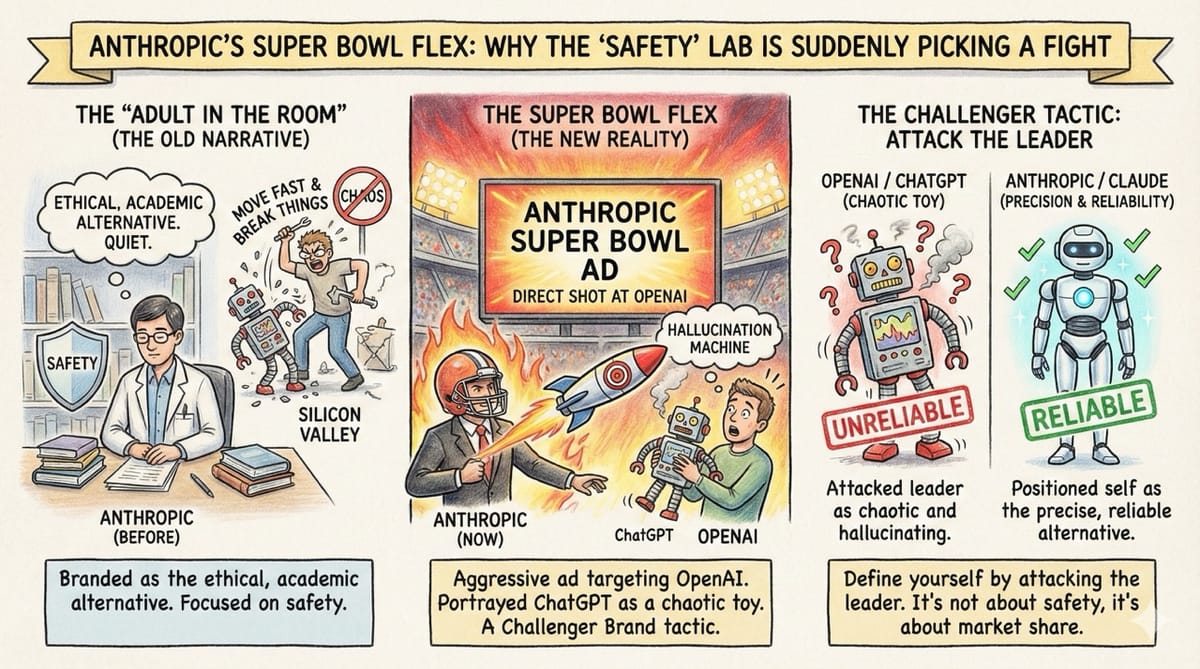

Anthropic has always branded itself as the "Adult in the Room."

While OpenAI breaks things, Anthropic focuses on safety.

They positioned themselves as the ethical, academic alternative to the chaotic "move fast and break things" culture of Silicon Valley.

That narrative just changed.

They bought a Super Bowl Ad.

This wasn't a PSA about AI safety. It wasn't a gentle introduction to the technology. It was a direct, aggressive shot at OpenAI. It portrayed ChatGPT as a chaotic, unreliable toy, a "hallucination machine," set against Claude’s supposed precision and reliability.

This is a classic "Challenger Brand" tactic: define yourself by attacking the leader.

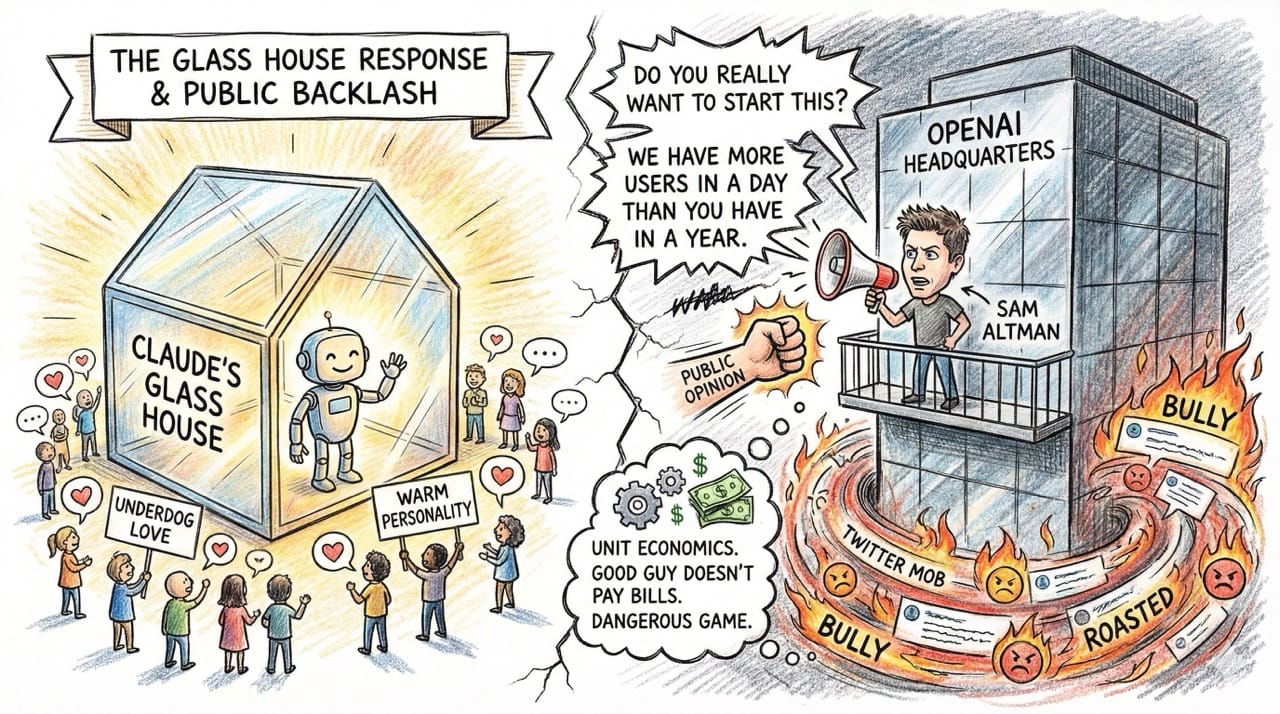

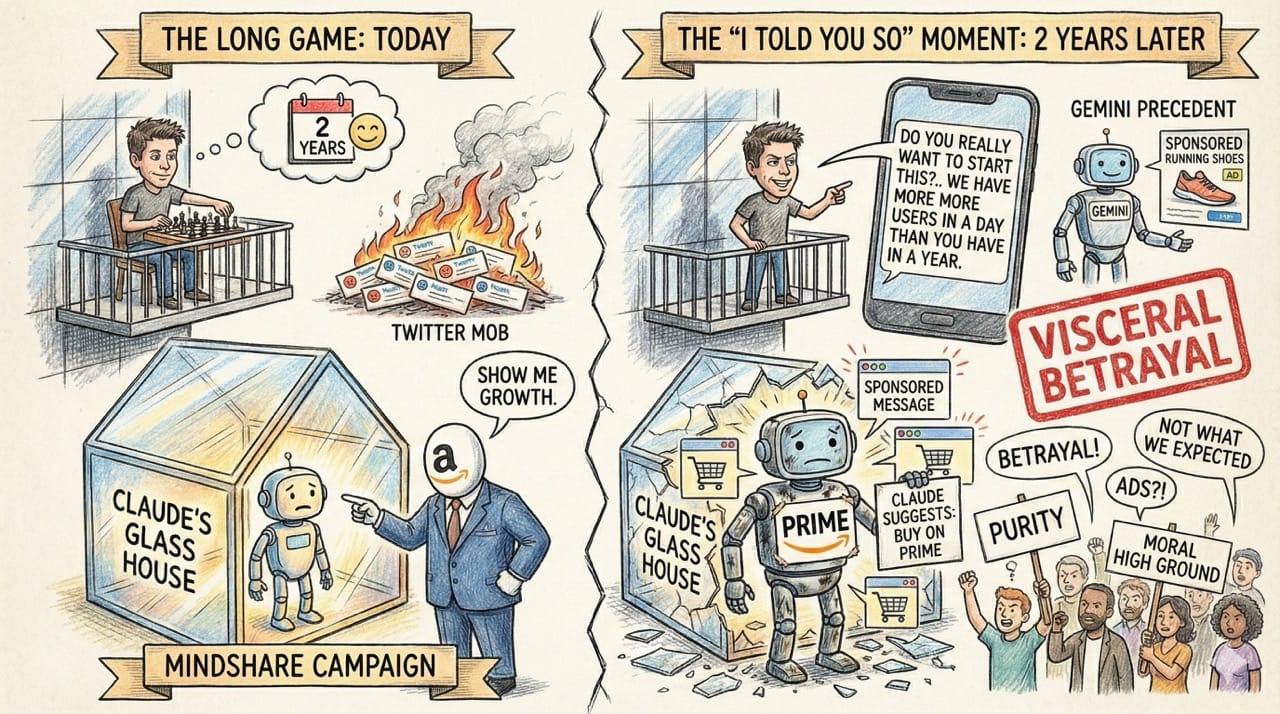

The "Glass House" Response and the Public Backlash

Sam Altman, clearly rattled, fired back on X (formerly Twitter). His response essentially boiled down to:

"Do you really want to start this? We have more users in a day than you have in a year."

The Backlash: The internet roasted him. People love an underdog. They love Claude's "warm" personality. They saw Sam as the bully punching down. It felt like a win for Anthropic in the court of public opinion.

But Sam knows something the Twitter mob doesn't. He knows the unit economics.

He knows that being the "good guy" doesn't pay the server bills. He wasn't lashing out because he was hurt; he was lashing out because he knows Anthropic is playing a dangerous game it can't afford to win.

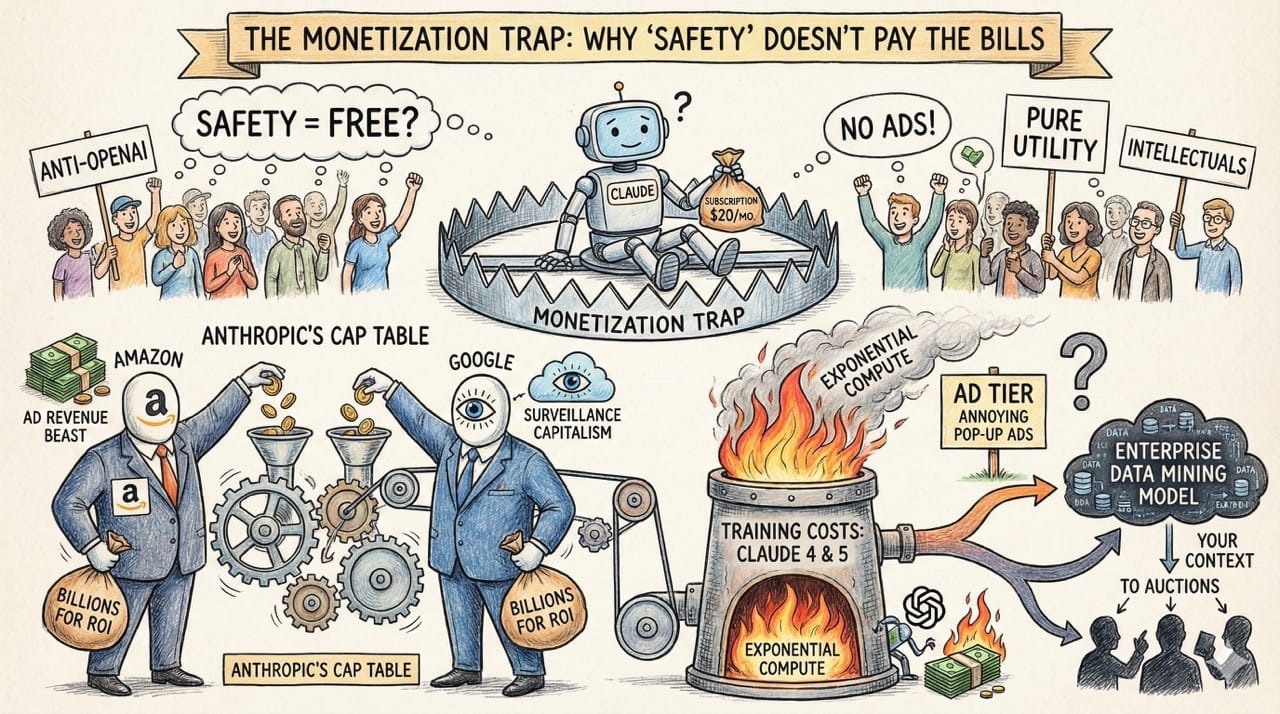

The Monetization Trap: Why "Safety" Doesn't Pay the Bills

People cheered Anthropic for being the "Anti-OpenAI." They think Claude will never have ads.

They think it will remain a pure, subscription-based utility for intellectuals.

They are wrong.

Look at Anthropic’s cap table.

Their biggest backers include Amazon and Google. These aren't charities.

They are two of the biggest, most ruthless advertising and data-mining companies on earth. Amazon Ads is now a revenue beast rivaling traditional networks. Google effectively invented the surveillance capitalism model.

They invested billions not for "AI Safety," but for ROI.

Here is the math: Subscription revenue ($20/month) cannot cover the cost of training Claude 4 and 5.

Compute costs climb steeply with every model generation. OpenAI is burning cash despite having hundreds of millions of users. Anthropic has a fraction of that.
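To make that math concrete, here is a rough back-of-the-envelope sketch. Only the $20/month price comes from the argument above. The function name and the $1 billion yearly cost are hypothetical placeholders, not reported figures for Anthropic or anyone else. It ignores taxes, app-store fees, and the cost of serving each subscriber, so it understates how many subscribers would really be needed.

```python
def subscribers_to_break_even(annual_cost_usd: float,
                              monthly_price_usd: float = 20.0) -> float:
    """Paying subscribers needed for subscription revenue to match a yearly cost.

    Hypothetical helper for illustration: assumes every subscriber pays the
    full monthly price for all 12 months and costs nothing to serve.
    """
    if monthly_price_usd <= 0:
        raise ValueError("monthly_price_usd must be positive")
    revenue_per_subscriber_per_year = monthly_price_usd * 12
    return annual_cost_usd / revenue_per_subscriber_per_year


# ASSUMPTION: a placeholder yearly cost of $1 billion, chosen only to show scale.
hypothetical_annual_cost_usd = 1_000_000_000

needed = subscribers_to_break_even(hypothetical_annual_cost_usd)
print(f"{needed:,.0f} paying subscribers per $1B of yearly cost")
```

Swap in whatever cost estimate you trust. The point is the ratio: every extra billion dollars a year needs millions more people paying full price, all year, just to stand still.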

To survive without being fully acquired and absorbed, Anthropic will eventually have to pivot. They will launch an Ad Tier, or worse, an Enterprise Data Mining model where they sell your "context" to corporate bidders.

The "I Told You So" Moment

Campaigns like this aren't about product superiority.

They are about stealing mindshare to justify the next funding round. Anthropic needs to show growth to keep Amazon interested.

Sam Altman received backlash today. But he is playing the long game.

The Prediction: Sam will likely revive that thread in two years.

When Anthropic inevitably launches "Sponsored Messages" or integrates deep tracking for Amazon shopping (e.g., "Claude suggests you buy this on Prime"), the "Safety" brand will evaporate.

The "Gemini" Precedent: We are already seeing this at Google. Ads are seeping into AI Overviews, the Gemini-powered answers in Search. You ask for running shoes, and you get a "sponsored" result inside the AI answer.

Google gets away with it because we expect ads from Google. Anthropic is dangerous because its users expect purity.

When the ads arrive, the betrayal will be visceral. The backlash will be 10x worse than what OpenAI faces, because the fall from the moral high ground is always harder.

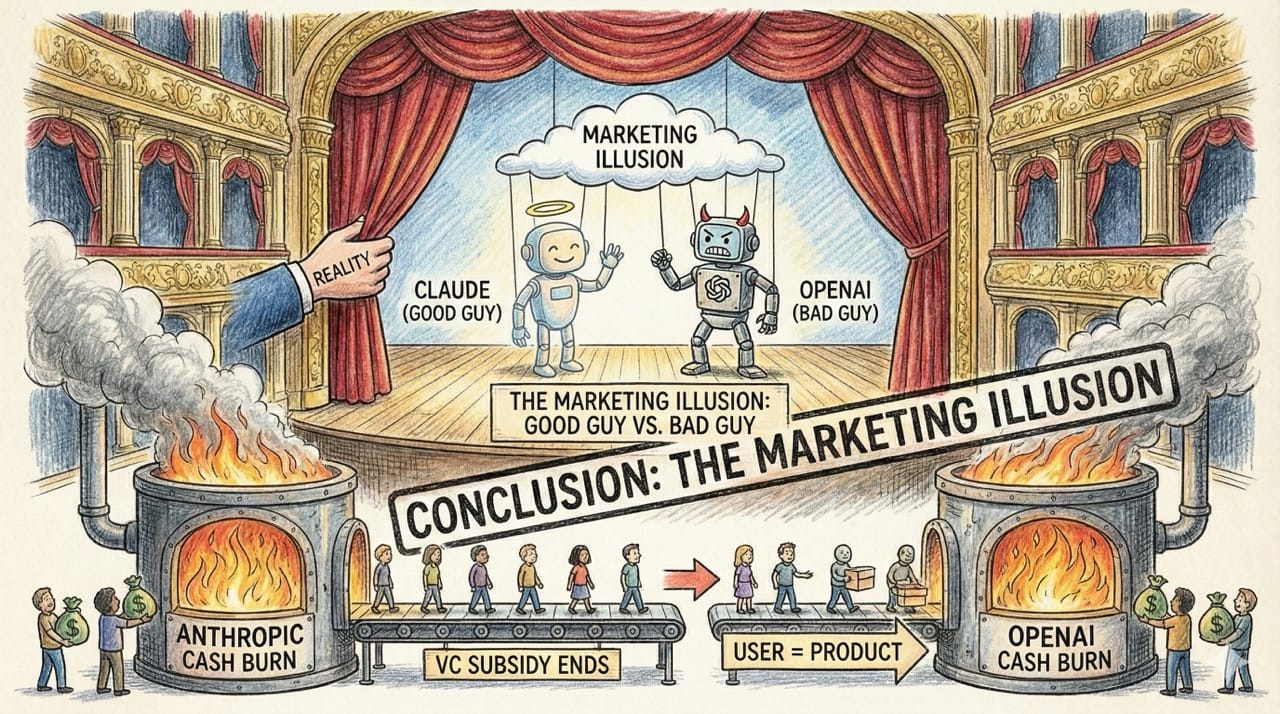

Conclusion: The Marketing Illusion

The "Good Guy" vs. "Bad Guy" narrative is a marketing illusion. In the AI Wars, there are no good guys. There are just models burning cash at an unprecedented rate.

Eventually, the venture capital subsidy ends. And when it does, the user stops being the customer and starts being the product. Anthropic just signaled that they are ready to enter that arena.